A Data-centric Framework for Improving Domain-specific Machine Reading Comprehension Datasets

Abstract

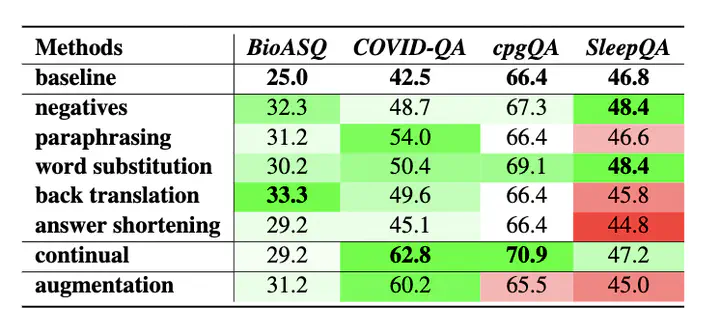

Low-quality data can cause downstream problems in high-stakes applications. A data-centric approach emphasizes improving dataset quality to enhance model performance. High-quality datasets are needed both for training general-purpose Large Language Models (LLMs) and for training domain-specific models. Domain-specific datasets are usually small because it is costly to engage a large number of domain experts to create them, so it is vital to ensure that the domain-specific training data is of high quality. In this paper, we propose a framework for enhancing the data quality of original datasets. We applied the proposed framework to four biomedical datasets and observed relative improvements of up to 33% and 40% when fine-tuning retrieval and reader models, respectively, on the BioASQ dataset, using back translation to enhance the quality of the original dataset.